At a Glance

- OpenAI will release ChatGPT Health, a walled-off version of the chatbot for medical queries, in the coming weeks

- Over 230 million people already ask ChatGPT health questions each week

- Doctors warn the bot can mislead patients with hallucinated statistics

- Why it matters: Users may skip human clinicians and act on AI-generated errors

OpenAI’s new ChatGPT Health promises tighter privacy and medical safeguards, yet doctors report patients arriving with dangerously wrong printouts from the standard chatbot.

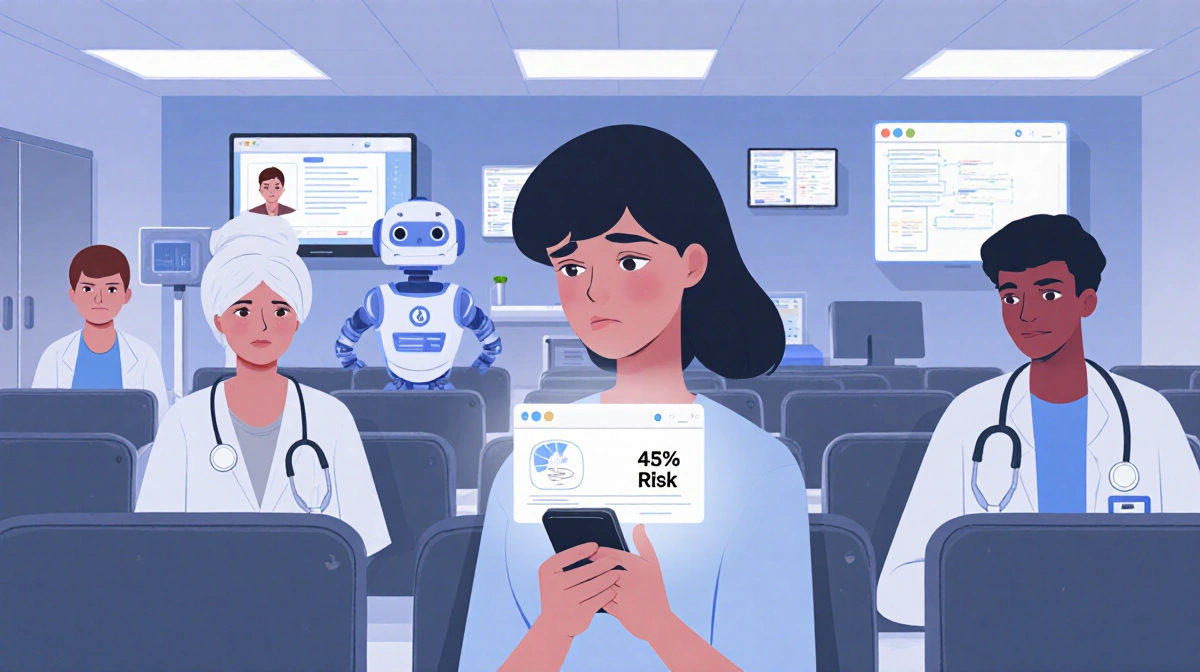

Patient Frightened by False 45% Risk

Dr. Sina Bari, a surgeon and AI healthcare lead at iMerit, told News Of Philadelphia that one patient refused a prescribed medication after ChatGPT claimed it carried a 45% risk of pulmonary embolism. When Dr. Bari traced the figure, he found it came from a study of a narrow subgroup of tuberculosis patients, a population that had no bearing on his patient.

Despite such mishaps, Dr. Bari welcomed the upcoming ChatGPT Health release because its conversations will be excluded from model training and will come with additional privacy controls.

230 Million Weekly Users Drive Formal Health Mode

Andrew Brackin, a health-tech investor at Gradient, told News Of Philadelphia that health questions are already “one of the biggest use cases” for ChatGPT, so a dedicated, secure channel makes sense.

Planned features include:

- Uploading personal medical records

- Syncing with Apple Health and MyFitnessPal

- Opting out of data retention

HIPAA Gap Sparks Regulatory Questions

Itai Schwartz, co-founder of MIND, warned that data could flow “from HIPAA-compliant organizations to non-HIPAA-compliant vendors,” leaving regulators to decide how to police the transfers.

Hallucination Rates Remain High

Vectara’s hallucination benchmark shows GPT-5 hallucinating more often than rival models from Google and Anthropic, a red flag when the advice can be a matter of life or death.

Six-Month Wait Pushes Patients Toward Bots

Dr. Nigam Shah of Stanford Health Care argues that access to care is the bigger crisis: typical waits for a primary-care visit run three to six months. Given that bottleneck, many patients prefer instant, if imperfect, AI answers.

Doctors Eye Admin Relief Over Patient Chat

Stanford’s ChatEHR project embeds AI inside electronic health records so clinicians can surface patient data faster. Early tester Dr. Sneha Jain says the tool “can help them get that information up front so they can spend time on what matters: talking to patients.”

Anthropic this week pitched a similar provider-side approach with Claude for Healthcare, claiming that prior-authorization paperwork, which can eat up “20, 30 minutes” per case, could be slashed through automation.

Core Tension: Patient Welfare vs. Shareholder Returns

Dr. Bari summarized the uneasy alliance: “Patients rely on us to be cynical and conservative in order to protect them,” while tech firms answer to shareholders.

Key Takeaways

- ChatGPT Health arrives soon with privacy walls, but accuracy risks persist.

- Millions already treat AI as a first-line doctor; formalizing the practice may reduce data leakage yet not curb hallucinations.

- Many clinicians prefer AI that handles back-office tasks rather than patient-facing triage, hoping to free up human time for actual care.