At a Glance

- Meta’s Oversight Board is reviewing its first-ever case on permanent account bans

- The case involves a high-profile Instagram user who repeatedly violated Community Standards

- Meta wants guidance on fair enforcement, transparency, and protecting public figures from abuse

- Why it matters: The decision could reshape how millions of users appeal bans and how Meta handles repeat offenders

Meta has asked its external Oversight Board to weigh in on how the company issues permanent account bans, marking the first time in the Board’s five-year history that it has examined the policy that locks users out of their profiles for good.

The referral centers on a high-profile Instagram account that Meta disabled after the user posted visual threats of violence against a female journalist, anti-gay slurs aimed at politicians, a depiction of a sex act, and allegations of misconduct directed at minority groups. Although the account had not accumulated enough strikes to trigger an automatic removal, Meta chose to impose a permanent ban.

According to News Of Philadelphia, the Board’s case materials did not identify the account, but the eventual recommendations could set precedents for:

- Users who target public figures with abuse, harassment, or threats

- People who lose access without receiving clear explanations

- Creators and businesses that rely on the platform for income and audience reach

Meta included five posts from the year prior to the ban when it referred the matter. In a public statement accompanying the case, the company said it wants the Board’s input on six questions:

- How can permanent bans be processed and reviewed fairly?

- Are current tools effective at protecting journalists and public figures from repeated abuse?

- What challenges arise when trying to identify off-platform content?

- Do punitive measures actually change user behavior?

- What best practices should guide transparent reporting on enforcement decisions?

- How should the company balance safety, voice, and consistency?

The request follows a surge of user complaints about mass bans issued with little explanation. Facebook Groups and individual users have been hit by sudden suspensions, and some users who paid for Meta Verified, which includes access to customer support, found the service unhelpful when they tried to appeal their bans.

Oversight Board’s limited reach

The Board can overturn individual content decisions and issue policy recommendations, yet it cannot force Meta to adopt broader policy changes. It was not consulted when CEO Mark Zuckerberg relaxed hate-speech restrictions last year, underscoring its advisory role.

The Board also moves slowly and accepts relatively few cases compared with the millions of moderation decisions Meta makes each month. Critics question whether the body has meaningful influence over the social networking giant.

Track record so far

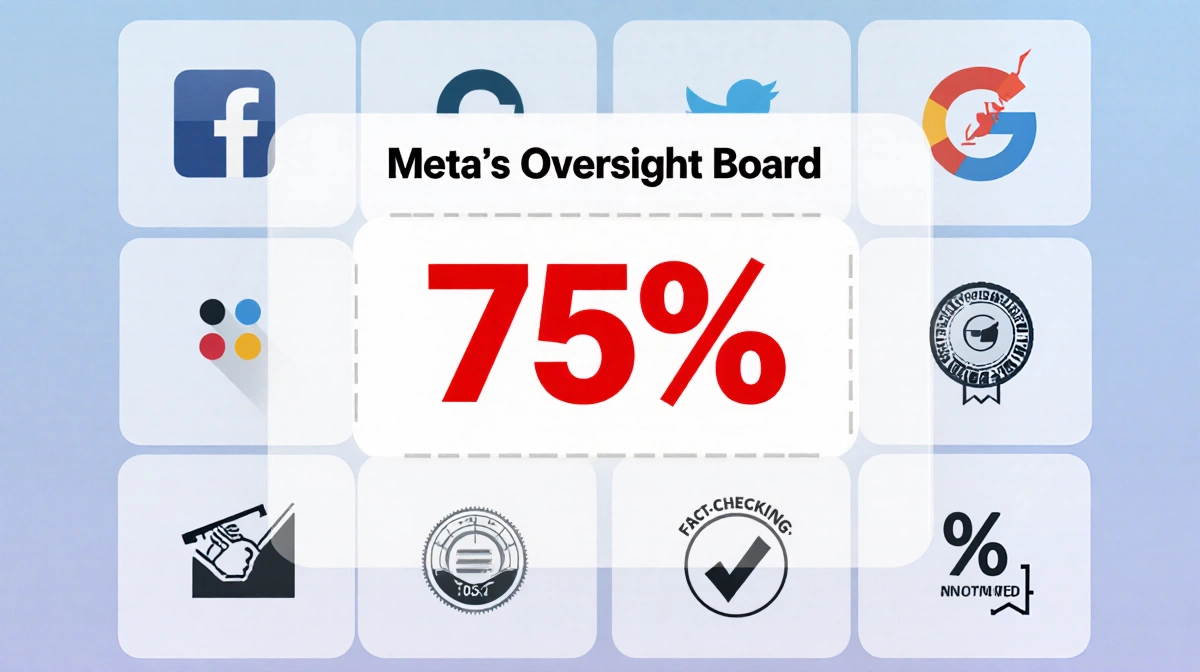

A December report showed that Meta had implemented 75% of the more than 300 recommendations the Board has issued, and the company has consistently followed the Board’s content-moderation rulings. Separately, Meta recently asked for feedback on its crowdsourced fact-checking feature, Community Notes.

Once the Oversight Board issues its opinion, Meta has 60 days to respond publicly. The Board is accepting public comments on the case, but submissions cannot be anonymous.