> At a Glance

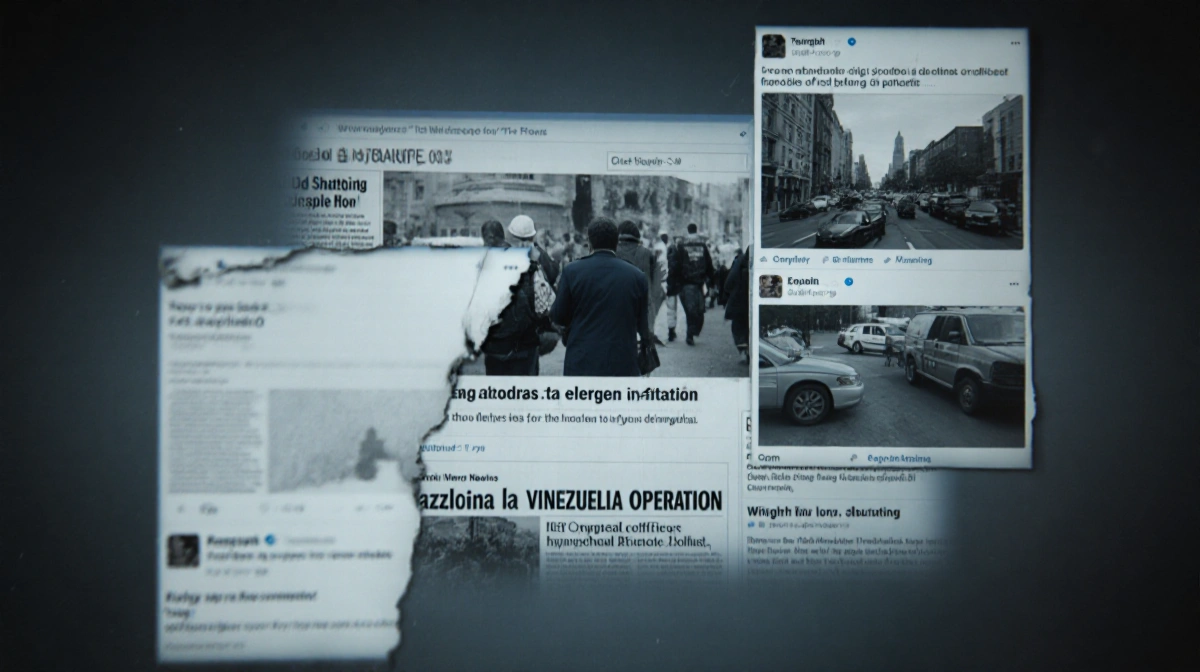

> – AI-generated images of President Trump’s Venezuela operation spread within the first week of 2026

> – A fake, likely AI-edited image of an ICE shooting scene circulated alongside real footage

> – Experts warn that soon no image or video can be trusted at a glance

> – Why it matters: Eroding trust in digital content threatens public safety, justice, and informed debate

The first days of 2026 have exposed a new reality: photos and videos can no longer be taken at face value. As AI tools grow sharper, fabricated visuals now surface faster than facts, leaving viewers unsure what, and whom, to believe.

The Venezuela Operation Spark

On Saturday, President Trump posted a photo of deposed Venezuelan leader Nicolás Maduro blindfolded on a Navy ship. Within hours, unverified images and AI-generated videos claiming to show his capture flooded social platforms. Elon Musk shared one such clip that appeared artificially produced, amplifying the confusion.

- A likely AI-edited image digitally unmasked the ICE officer involved in a fatal shooting in Texas

- Viral posts mixed archival footage with fresh AI creations to stoke emotion

- Platform algorithms rewarded recycled and synthetic content with wider reach

The Trust Collapse

Jeff Hancock, founding director of the Stanford Social Media Lab, says the default assumption that digital media is authentic is crumbling:

> Jeff Hancock

> “For a while people are really going to not trust things they see in digital spaces.”

Hany Farid’s recent deepfake-detection study found that viewers now mistake real content for fake about as often as they correctly spot actual fakes. Political material raises the error rate further because confirmation bias kicks in.

| Scenario | Misidentification Rate |
|---|---|
| Neutral clips | ~35% |
| Politically charged clips | ~50% |

Renee Hobbs at the University of Rhode Island warns the mental load of constant skepticism leads many to disengage entirely:

> Renee Hobbs

> “If constant doubt is the norm, disengagement is a logical response… the danger is the collapse of even being motivated to seek truth.”

Detection Arms Race

Old tips like counting the fingers on AI-generated hands are losing their value as generators improve. Computer-vision experts say pixel-level clues will soon vanish, making fakes that are visually indistinguishable from real footage inevitable.

Adam Mosseri, head of Instagram, admitted in a Threads post:

> Adam Mosseri

> “For most of my life I could safely assume…photographs or videos…are largely accurate…This is clearly no longer the case.”

Siwei Lyu, who helps run the open-source DeepFake-o-meter, urges users to weigh context over pixels:

> Siwei Lyu

> “Common awareness and common sense are the most important protection measures we have.”

Because people instinctively trust familiar faces, AI likenesses of celebrities, relatives, or officials will keep spreading unless audiences question why a piece of content appeared and who shared it.

Key Takeaways

- AI-generated evidence already influences court cases and fools government officials

- The OECD will roll out a global AI-literacy test for 15-year-olds in 2029

- Media literacy educators are racing to fold generative-AI lessons into curricula

- Simple habits, such as pausing to verify sources, remain the strongest everyday defense

Until platforms, regulators, and users adapt, every viral image will carry a silent question: is this moment real?