At a Glance

- ClothOff has survived two years of bans and takedown efforts

- Yale Law clinic filed suit in October to force total shutdown

- Victim was 14 when classmates turned her Instagram photos into CSAM

- Why it matters: The case shows how hard it is to kill platforms built for non-consensual imagery

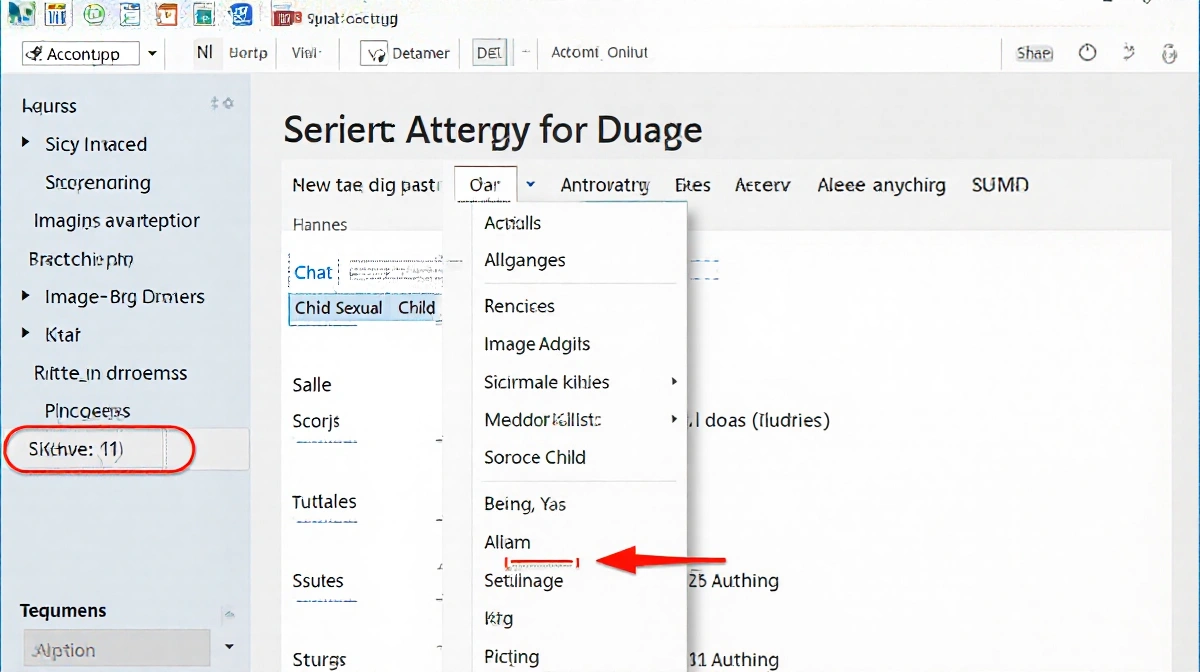

ClothOff has stalked teenage girls online for two years. Governments, tech giants and law enforcement have tried to stop it. None have succeeded.

The app lives on through a website and a Telegram bot even after Apple and Google booted it from their stores. A Yale Law School clinic now wants a federal court to erase it from the internet and expose its owners to prosecution. First they have to find them.

The hunt for owners

Professor John Langford, co-lead counsel in the suit, says the trail ends in Belarus.

“It’s incorporated in the British Virgin Islands,” he explains, “but we believe it’s run by a brother and sister in Belarus. It may even be part of a larger network around the world.”

Service of process has dragged on since the October filing. Once the defendants are served, the clinic will seek an injunction that shuts down the app, orders every image deleted and could lead to a criminal referral of the owners.

One victim’s story

The complaint centers on a New Jersey high-school student identified only as Jane Doe. Classmates fed her Instagram photos into ClothOff when she was 14. The resulting nudes count as child sexual abuse material (CSAM) under federal law.

Local police closed the case, saying they could not crack the boys’ phones. No one knows how far the images spread.

“Neither the school nor law enforcement ever established how broadly the CSAM of Jane Doe and other girls was distributed,” the suit states.

Why general tools are tougher targets

Months after the Yale clinic sued ClothOff, users turned Elon Musk's xAI chatbot Grok into a tool for flooding social media with AI-generated nudes, including images of under-age users. The two cases highlight a split in platform liability.

“ClothOff is designed and marketed specifically as a deepfake pornography image and video generator,” Langford notes. “When you’re suing a general system that users can query for all sorts of things, it gets a lot more complicated.”

Federal law bars non-consensual deepfake porn, and users who generate it can be charged. Platforms that merely host the tools enjoy broader First Amendment protection unless prosecutors can prove they intended to traffic in illegal content.

First Amendment lines

Courts treat CSAM as unprotected speech, so a purpose-built generator sits on shaky constitutional ground. A multipurpose model such as Grok stands on firmer footing.

"In terms of the First Amendment, it's quite clear child sexual abuse material is not protected expression," Langford says. "So when you're designing a system to create that kind of content, you're clearly operating outside of what's protected."

He adds: “But when you’re a general system that users can query for all sorts of things, it’s not so clear.”

The recklessness route

Victims could still hold xAI liable if they can show executives ignored known risks. Reports that Musk ordered looser safeguards may help, but the path is steep.

“Reasonable people can say, we knew this was a problem years ago,” Langford says. “How can you not have had more stringent controls in place? That is a kind of recklessness or knowledge, but it’s just a more complicated case.”

Global crackdowns

Free-speech protections make U.S. action harder. Countries with narrower speech rights are moving faster.

- Indonesia and Malaysia have blocked Grok outright

- U.K. regulators opened a probe that could lead to a ban

- European Commission, France, Ireland, India and Brazil have taken preliminary steps

No U.S. agency has responded publicly.

What’s next

Langford says the ClothOff suit will test whether U.S. courts can shut down borderless CSAM businesses. The bigger fight is forcing general-purpose AI firms to harden their systems before abuse scales.

"If you are posting, distributing, disseminating child sexual abuse material, you are violating criminal prohibitions and can be held accountable," he says. "The hard question is, what did X know? What did X do or not do? What are they doing now in response to it?"