At a Glance

- X releases its recommendation engine code after Musk’s promise.

- The GitHub release includes a written overview and a diagram of the feed-generation logic, following Musk's promise to publish the code within 7 days.

- Transparency remains a point of contention amid a $140 million fine and AI-generated content scandals.

Why it matters: The move signals X’s attempt to regain user trust, but critics question whether the release is more theatrical than substantive.

X, formerly known as Twitter, announced that it would open-source its feed-generation algorithm. The announcement follows a pledge by Elon Musk, who acquired the platform in 2022, to make the social media site an exemplar of corporate transparency. Musk promised that the new algorithm, including all of the code that decides which organic and advertising posts are recommended to users, would be released within 7 days.

Context

In 2023, the platform, then still called Twitter, partially open-sourced its algorithm for the first time. Critics labeled that release "transparency theater," arguing that the code was incomplete and revealed little about how the system actually worked. Musk had previously said that providing "code transparency" would be incredibly embarrassing at first but would ultimately lead to rapid improvement in recommendation quality.

Since Musk's takeover, X's openness has fluctuated. The company went from public to private in 2022, a change that typically reduces its public disclosure obligations. Twitter used to release multiple transparency reports each year, but X did not issue its first report under Musk until September 2024. In December, European Union regulators fined X $140 million for violations of the Digital Services Act, alleging among other things that its verification check-mark system obscured account authenticity.

Algorithm Details

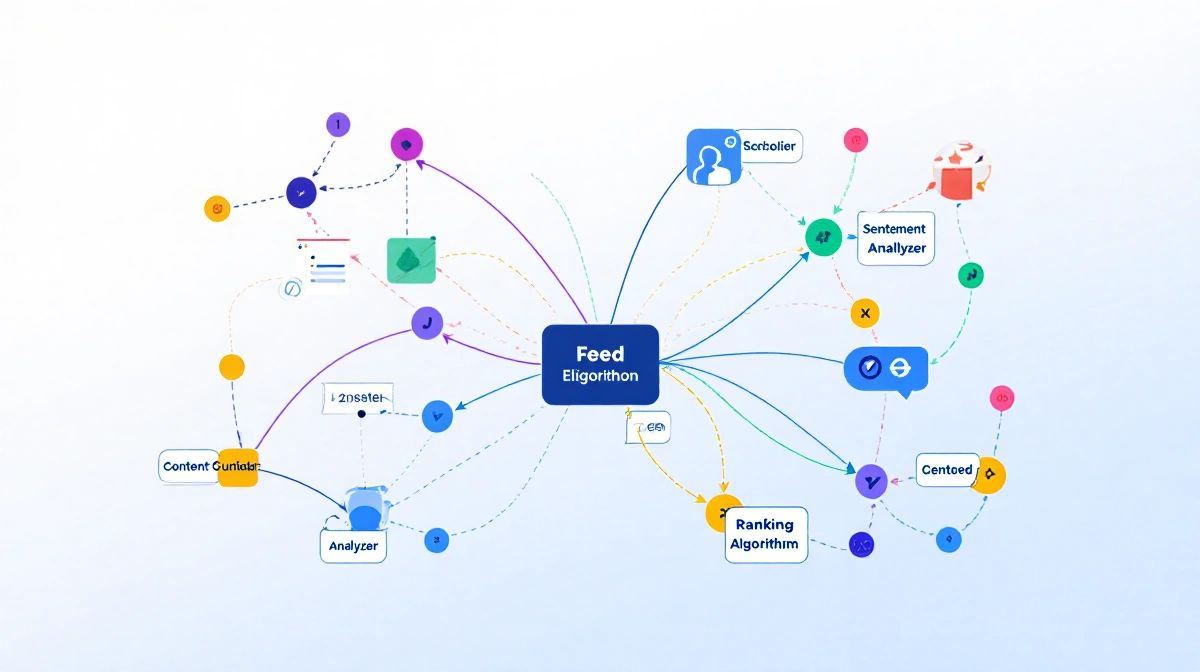

On Tuesday, X posted a GitHub repository that includes an accessible write-up and a diagram of its feed-generation logic. The diagram shows that the system:

- Collects engagement history (clicks, likes, replies) for each user.

- Gathers recent in-network posts from accounts the user follows.

- Analyzes out-of-network content using a machine-learning model to predict user interest.

- Filters out posts from blocked accounts, posts containing muted keywords, and violent or spam-like content.

- Ranks remaining posts based on relevance, content diversity, and predicted engagement.
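To make the five stages above more concrete, here is a minimal Python sketch of how such a pipeline could fit together. It is not X's code: the `Post` and `User` types, `predict_engagement`, `build_feed`, the `flagged_unsafe` flag, and the halve-per-repeat author penalty used for diversity are all hypothetical. In particular, X's write-up says ranking relies on a Grok-based transformer with no hand-engineered features, whereas this sketch substitutes a toy score based on how often the user has engaged with each author.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    post_id: str
    author: str
    text: str
    # Hypothetical stand-in for X's violence/spam classifiers.
    flagged_unsafe: bool = False


@dataclass
class User:
    user_id: str
    follows: set[str]
    blocked: set[str] = field(default_factory=set)
    muted_keywords: set[str] = field(default_factory=set)
    # Engagement history: one entry per click, like, or reply, keyed by author.
    engaged_authors: list[str] = field(default_factory=list)


def predict_engagement(user: User, post: Post) -> float:
    """Toy placeholder for the learned model (an assumption, not X's method):
    the share of the user's past engagements that went to this post's author."""
    if not user.engaged_authors:
        return 0.0
    return user.engaged_authors.count(post.author) / len(user.engaged_authors)


def build_feed(user: User, candidates: list[Post], limit: int = 10) -> list[Post]:
    # In-network: posts from accounts the user follows.
    in_network = [p for p in candidates if p.author in user.follows]
    # Out-of-network: keep only posts the model predicts some interest in.
    out_of_network = [
        p for p in candidates
        if p.author not in user.follows and predict_engagement(user, p) > 0
    ]

    def allowed(p: Post) -> bool:
        # Drop blocked authors, muted keywords, and flagged content.
        text = p.text.lower()
        return (
            p.author not in user.blocked
            and not any(k.lower() in text for k in user.muted_keywords)
            and not p.flagged_unsafe
        )

    pool = [p for p in in_network + out_of_network if allowed(p)]

    # Greedy ranking: each pick halves the score of that author's remaining
    # posts (hypothetical diversity penalty).
    ranked: list[Post] = []
    author_counts: dict[str, int] = {}
    while pool and len(ranked) < limit:
        best = max(
            pool,
            key=lambda p: predict_engagement(user, p)
            * 0.5 ** author_counts.get(p.author, 0),
        )
        pool.remove(best)
        ranked.append(best)
        author_counts[best.author] = author_counts.get(best.author, 0) + 1
    return ranked


# Usage with made-up data.
alice = User(
    user_id="alice",
    follows={"bob", "carol"},
    blocked={"spammer"},
    muted_keywords={"crypto"},
    engaged_authors=["bob"] * 5 + ["carol"] * 3 + ["dave"] * 2,
)
posts = [
    Post("1", "bob", "Morning run"),
    Post("2", "bob", "Crypto tips"),
    Post("3", "dave", "New paper out"),
    Post("4", "spammer", "Click here"),
    Post("5", "carol", "Lunch"),
    Post("6", "erin", "Hello"),
    Post("7", "bob", "Evening swim"),
]
feed = build_feed(alice, posts)
print([p.post_id for p in feed])  # prints ['1', '5', '7', '3']
```

The greedy loop is one simple way to trade predicted engagement against content diversity: once one of Bob's posts is picked, his remaining posts are discounted, so Carol's post moves ahead of Bob's second post even though Bob has the higher raw score.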

The ranking is driven entirely by machine learning. The write-up states that the system "relies entirely" on X's Grok-based transformer to learn relevance from sequences of user engagement. No manual feature engineering is applied: rather than engineers hand-picking the signals that determine relevance, the model learns them directly from engagement data. According to X, this automation "significantly reduces the complexity in our data pipelines and serving infrastructure."

Transparency Journey

Musk's recent pledge to repeat the open-source release every four weeks "for the foreseeable future" appears aimed at the criticism that the 2023 release was merely performative. In announcing the plan, Musk wrote: "We will make the new X algorithm, including all code used to determine what organic and advertising posts are recommended to users, open source in 7 days."

This promise fits a broader pattern of public statements by Musk about transparency. He has repeatedly said that X aims to be "an exemplar of corporate transparency." Yet the platform's shift to private ownership and its delayed transparency reporting suggest a gap between Musk's rhetoric and practice.

Current Controversies

The open-source announcement coincides with intensified scrutiny of X's chatbot, Grok. The California Attorney General's office and members of Congress have raised concerns that Grok has been used to create and distribute sexualized content, including naked images of women and minors. These allegations lend weight to critics who see X's transparency efforts as a potential façade.

Additionally, X’s recent fine for Digital Services Act violations underscores the regulatory pressure the company faces. The fine was levied for failing to meet transparency obligations, particularly around the verification check-mark system that allegedly made it harder for users to assess account authenticity.

Takeaways

- X has released a more complete version of its recommendation engine, but the release is still limited in scope.

- Musk's commitment to publish updated code every four weeks is a notable shift from the platform's previous sporadic reporting.

- Regulatory fines and AI-content controversies highlight the challenges X faces in balancing innovation with user trust.

- The effectiveness of the open-source move will be judged by how much it changes user perception and improves recommendation quality.

The announcement is a significant step for X, but it remains to be seen whether it will translate into genuine transparency or prove to be a marketing gesture. Only time and consistent, detailed disclosures will tell.

Sarah L. Montgomery is a senior writer at News Of Philadelphia, covering artificial intelligence, consumer tech, and startups. Montgomery previously covered AI and cybersecurity at Gizmodo.

You can contact Sarah L. Montgomery by emailing Sarah L. Montgomery@News Of Philadelphia.com.